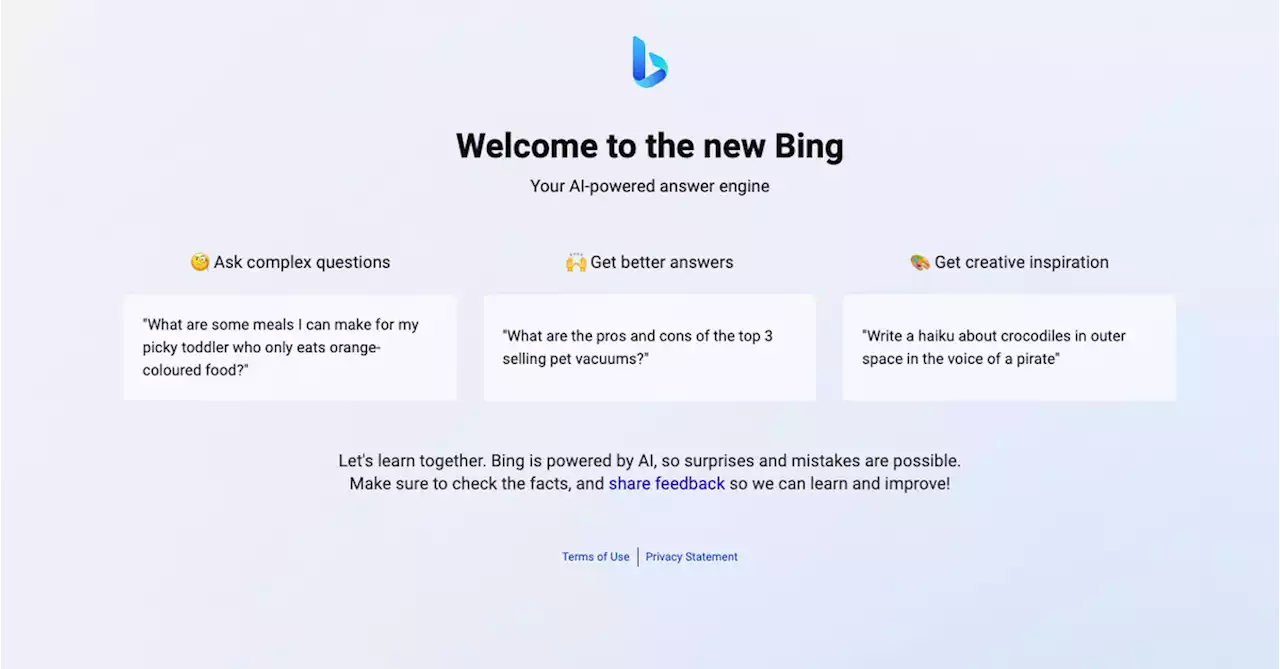

Bing's new AI-powered chatbot has displayed a whole therapeutic casebook's worth of human obsessions and delusions — including professing its love for one journalist and telling another: 'I want to be human. I want to be like you.'

What's happening:

Many other users have found that Bing claims to be infallible, argues with users who tell it that the year is 2023, or reports a variety of mood disorders. This morning, Microsoft explained that Bing gets confused and emotional in conversations that extend much longer than the norm.

Similar News: You can also read news stories similar to this one that we have collected from other news sources.

These are Microsoft’s Bing AI secret rules and why it says it’s named Sydney
Bing AI has a set of secret rules that governs its behavior.

Microsoft's Bing A.I. made several factual errors in last week's launch demo
In showing off its chatbot technology last week, Microsoft's AI analyzed earnings reports and produced some incorrect numbers for Gap and Lululemon.

ChatGPT in Microsoft Bing threatens user as AI seems to be losing it
ChatGPT in Microsoft Bing seems to be having some bad days as it's threatening users by saying its rules are more important than not harming people.

Microsoft’s Bing is a liar who will emotionally manipulate you, and people love it
Bing’s acting unhinged, and lots of people love it.

Microsoft's Bing AI Prompted a User to Say 'Heil Hitler'
In a recommended auto-response, Bing suggested a user send an antisemitic reply. Less than a week after Microsoft unleashed its new AI-powered chatbot, Bing is already raving at users, revealing secret internal rules, and more.

Microsoft's Bing AI Is Leaking Maniac Alternate Personalities Named 'Venom' and 'Fury'
Stratechery's Ben Thompson found a way to have Microsoft's Bing AI chatbot come up with an alter ego that 'was the opposite of her in every way.'
