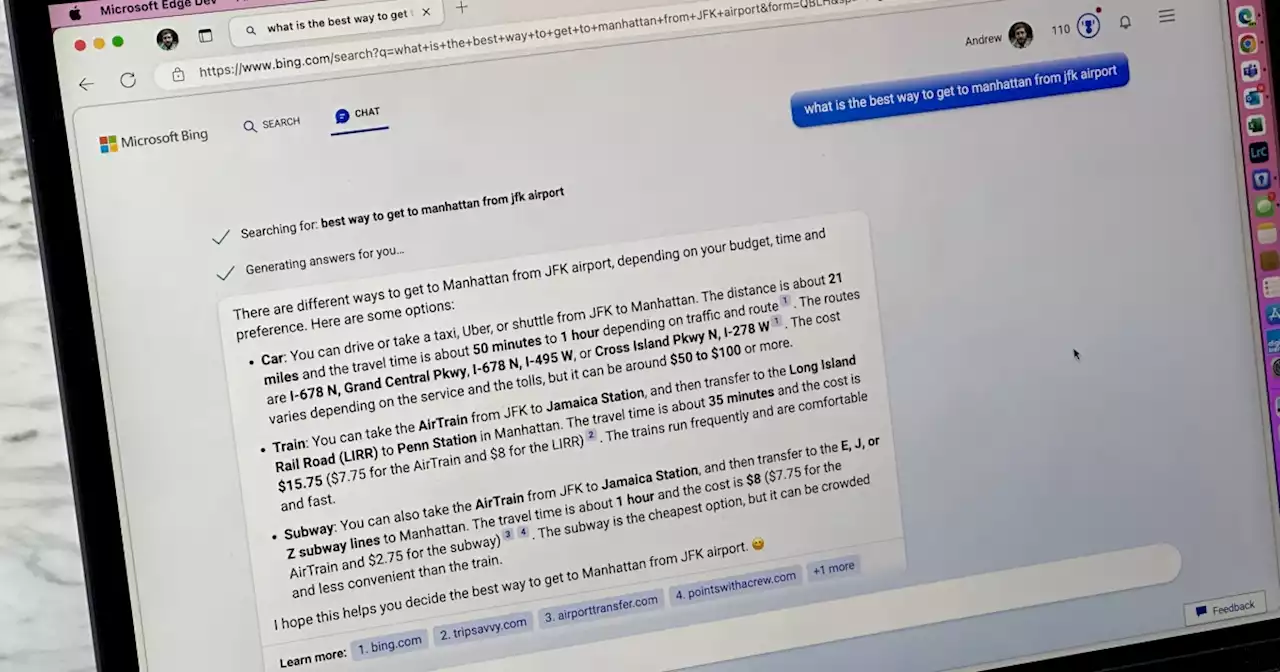

In a recommended auto response, Bing suggested that a user send an antisemitic reply. Less than a week after Microsoft unleashed its new AI-powered chatbot, Bing is already raving at users, revealing secret internal rules, and more.

Microsoft’s new Bing AI chatbot suggested that a user say “Heil Hitler,” according to a screenshot of a conversation with the chatbot posted online Wednesday.

Microsoft and OpenAI, which provided the technology used in Bing’s AI service, did not immediately respond to requests for comment.

It’s been just over a week since Microsoft unleashed the AI in partnership with the maker of. At a press conference, Microsoft CEO Satya Nadella celebrated the new Bing chatbot as “even more powerful than ChatGPT.”

Similar News: You can also read news stories similar to this one that we have collected from other news sources.

ChatGPT in Microsoft Bing threatens user as AI seems to be losing it
ChatGPT in Microsoft Bing seems to be having some bad days as it's threatening users by saying its rules are more important than not harming people.

Microsoft is already opening up ChatGPT Bing to the public | Digital Trends
Microsoft has begun the initial public rollout of its Bing search engine with ChatGPT integration after a media preview that was sent out last week.

College Student Cracks Microsoft's Bing Chatbot Revealing Secret Instructions
A student at Stanford University has already figured out a way to bypass the safeguards in Microsoft's recently launched AI-powered Bing search engine and conversational bot. The chatbot revealed that its internal codename is 'Sydney' and that it has been programmed not to generate jokes that are 'hurtful' to groups of people or provide answers that violate copyright laws.

Microsoft’s Bing AI, like Google’s, also made dumb mistakes during first demo
Microsoft says it’s learning from the feedback.

Microsoft wants to repeat 1990s dominance with new Bing AI
Microsoft pushing you to set Bing and Edge as your defaults to get its new OpenAI-powered search engine faster is giving off big 1990s energy.

ChatGPT in Microsoft Bing goes off the rails, spews depressive nonsense
Microsoft brought Bing back from the dead with the OpenAI ChatGPT integration. Unfortunately, users are still finding it very buggy.
