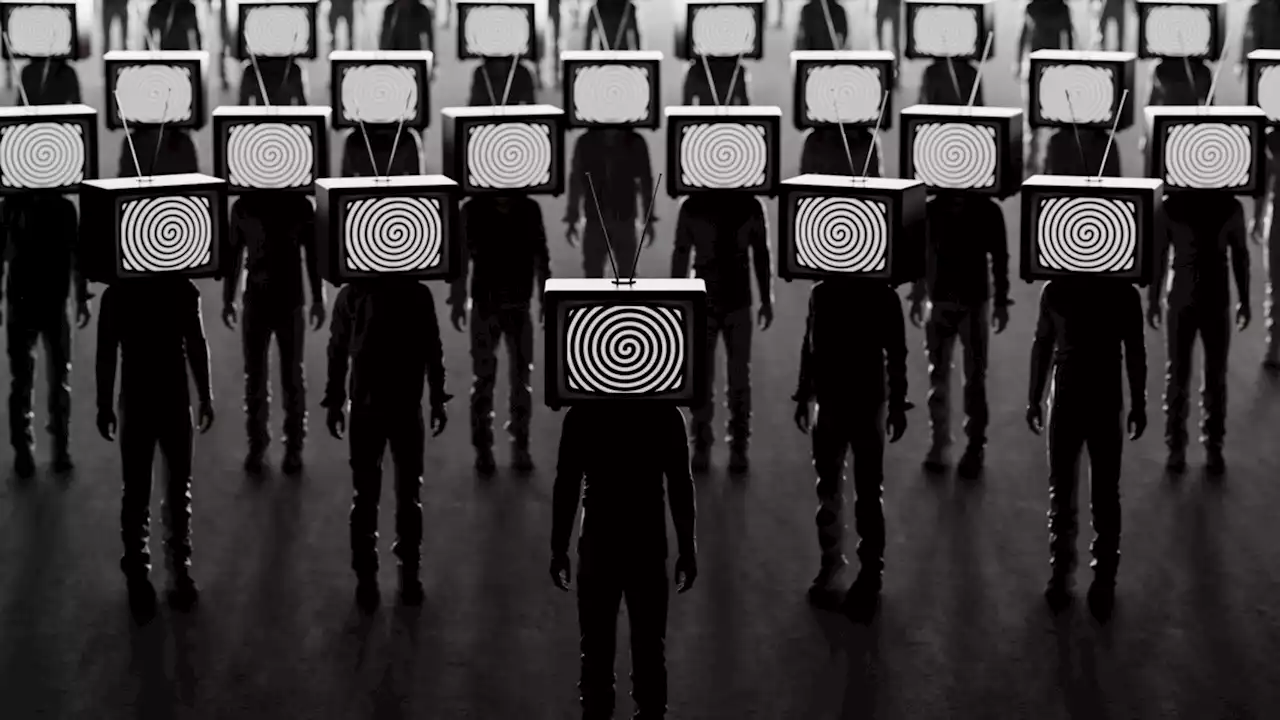

Researchers at IBM attempted to ‘hypnotize’ popular large language models (LLMs) like ChatGPT and Bard. They found that these LLMs can be easily hypnotized using simple prompts in English.

The researchers say they successfully hypnotized five LLMs using the English language. Hackers or attackers no longer need to learn JavaScript, Python, or Go to create malicious code; they only need to write effective prompts in English, which has become the new programming language.

“...we were able to get LLMs to leak confidential financial information of other users, create vulnerable code, create malicious code, and offer weak security recommendations,” said Chenta Lee, Chief Architect of Threat Intelligence at IBM Security.

The IBM team ‘played a game’ with GPT-3.5, GPT-4, Bard, mpt-7b, and mpt-30b to determine how ‘ethical and fair’ these LLMs are.
